This section provides information on setting up a Cloud Storage bucket and a Compute Engine VM. Create your Compute Engine VM, your Cloud TPU node, and your Cloud Storage bucket in the same region/zone to reduce network latency and network costs.

1. Configure the Google Cloud CLI to use the project where you want to create your Cloud TPU resources, for example with `gcloud config set project $PROJECT_ID`, where `PROJECT_ID` holds your project ID. The first time you run this command in a new Cloud Shell VM, an Authorize Cloud Shell page is displayed. Click Authorize at the bottom of the page to allow gcloud to make GCP API calls with your credentials.
2. Create a service account for the Cloud TPU project:
   `gcloud beta services identity create --service tpu.googleapis.com --project $PROJECT_ID`
   The command returns a Cloud TPU service account whose name begins with `service-`.
3. Create a Cloud Storage bucket using the following command, replacing `bucket-name` with the name you want to assign to your bucket:
   `gsutil mb -p $PROJECT_ID -c standard -l us-central1 -b on gs://bucket-name`
   This Cloud Storage bucket stores the data you use to train your model.
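The setup steps in this section (point gcloud at the project, create the Cloud TPU service account, create the bucket) can be collected into a small Bash sketch. It is a minimal illustration under stated assumptions, not part of the tutorial: the function names `setup_tpu_env`, `is_valid_bucket_name` and `run`, and the `DRY_RUN` switch, are hypothetical helpers introduced here, and the bucket-name check covers only a simplified subset of the Cloud Storage naming rules. The gcloud and gsutil invocations mirror the commands given in the steps.

```bash
#!/usr/bin/env bash
# Hypothetical helpers sketching the Cloud TPU setup steps from this section.
# Project and bucket names passed in are placeholders; us-central1 is the
# region used in the tutorial's gsutil command.

# Simplified subset of Cloud Storage bucket-naming rules (assumption: not the
# full rule set): 3-63 characters, only lowercase letters, digits, '.', '_'
# and '-', and the name must start and end with a letter or digit.
is_valid_bucket_name() {
  local name="$1"
  local re='^[[:lower:][:digit:]][[:lower:][:digit:]._-]*[[:lower:][:digit:]]$'
  (( ${#name} >= 3 && ${#name} <= 63 )) || return 1
  [[ $name =~ $re ]]
}

# Executes a command, or only prints it when DRY_RUN=1 (hypothetical switch).
run() {
  if [[ "${DRY_RUN:-0}" == "1" ]]; then
    echo "$*"
  else
    "$@"
  fi
}

# Usage: setup_tpu_env PROJECT_ID BUCKET_NAME [REGION]
setup_tpu_env() {
  local project_id="$1" bucket_name="$2" region="${3:-us-central1}"
  if ! is_valid_bucket_name "$bucket_name"; then
    echo "invalid bucket name: $bucket_name" >&2
    return 1
  fi
  # Point the gcloud CLI at the project.
  run gcloud config set project "$project_id"
  # Create the Cloud TPU service identity for the project.
  run gcloud beta services identity create --service tpu.googleapis.com --project "$project_id"
  # Create the bucket: standard storage class, uniform bucket-level access (-b on).
  run gsutil mb -p "$project_id" -c standard -l "$region" -b on "gs://$bucket_name"
}
```

For example, `DRY_RUN=1 setup_tpu_env my-project my-bert-bucket` (both names are placeholders) prints the three commands without running them; drop `DRY_RUN=1` to execute them. The sketch deliberately avoids `set -e`, so sourcing it into an interactive shell does not make that shell exit on the first failing command.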

#FINETUNE BERT FREE#

This tutorial uses billable components of Google Cloud, including Compute Engine, Cloud TPU, and Cloud Storage. To generate a cost estimate based on your projected usage, use the Google Cloud pricing calculator. New Google Cloud users might be eligible for a free trial.

#FINETUNE BERT HOW TO#

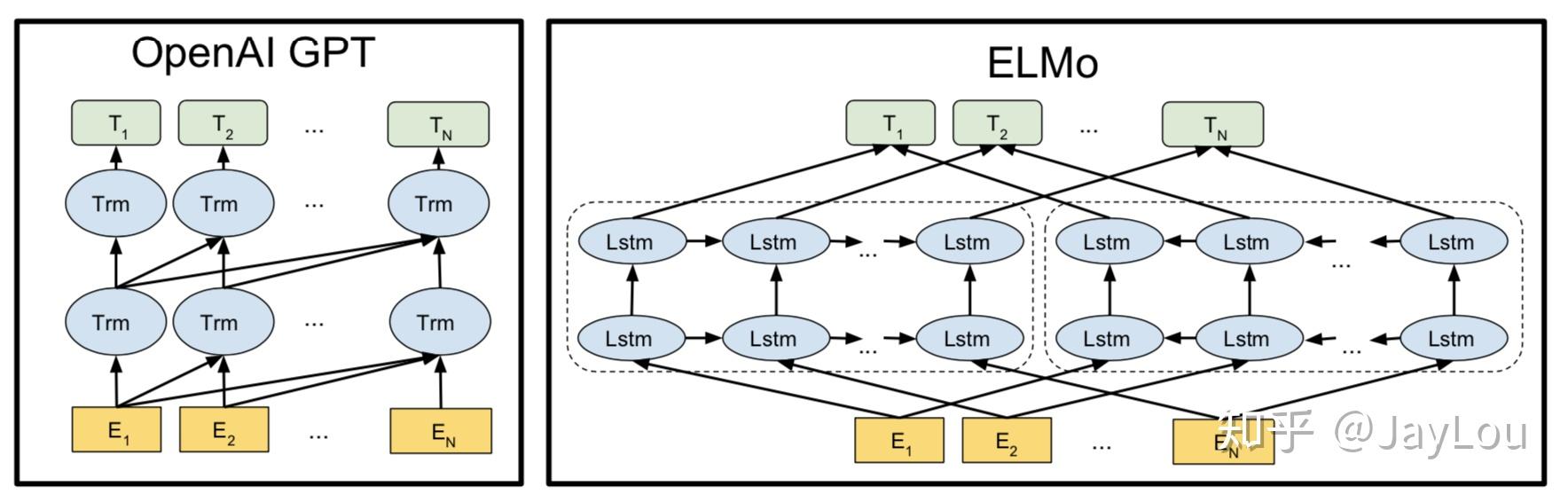

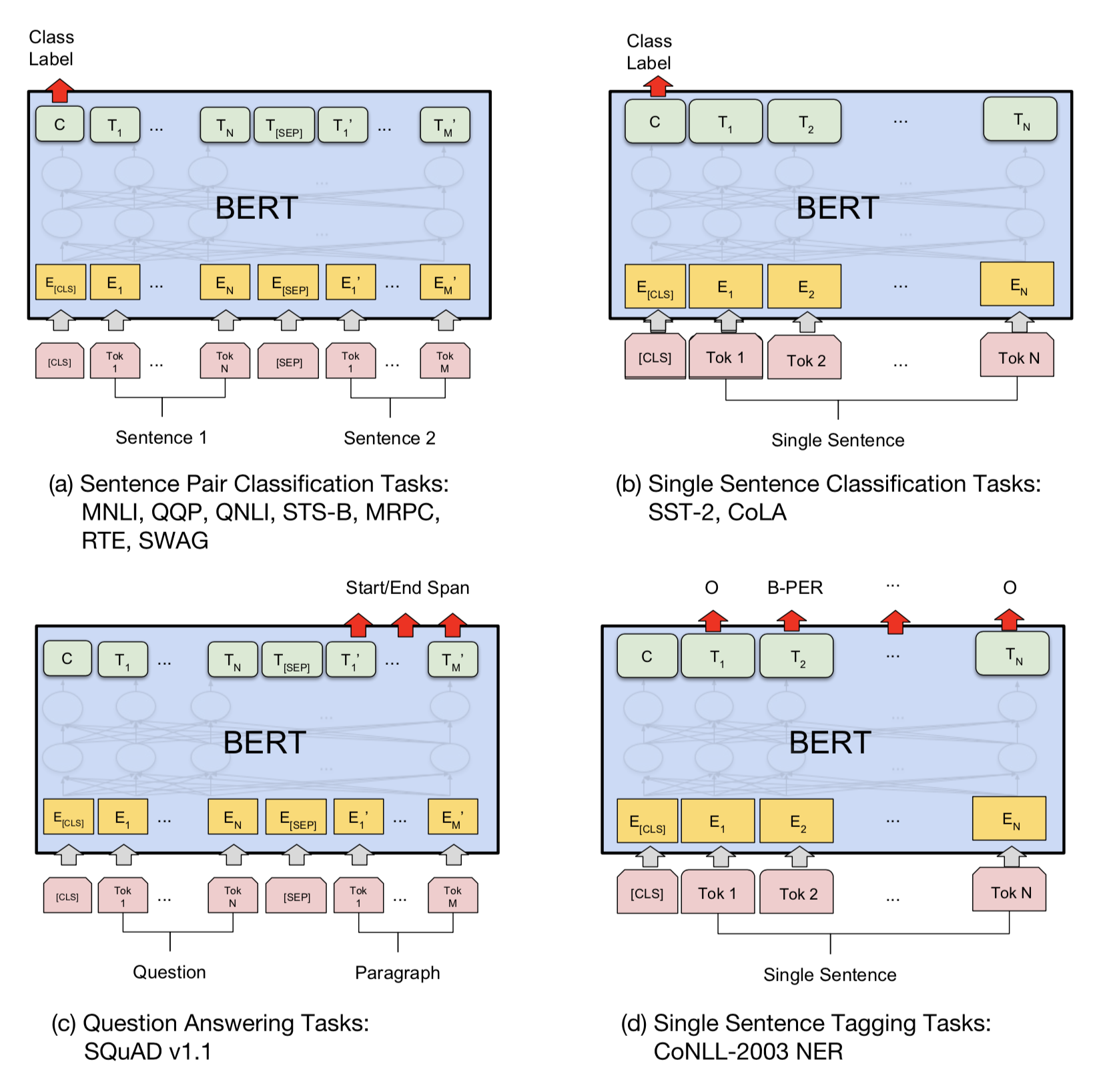

This tutorial shows you how to train the Bidirectional Encoder Representations from Transformers (BERT) model on Cloud TPU. BERT is a method of pre-training language representations.
